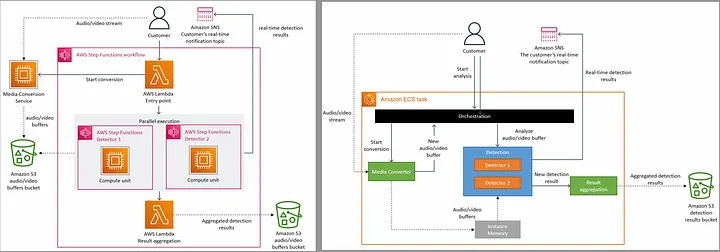

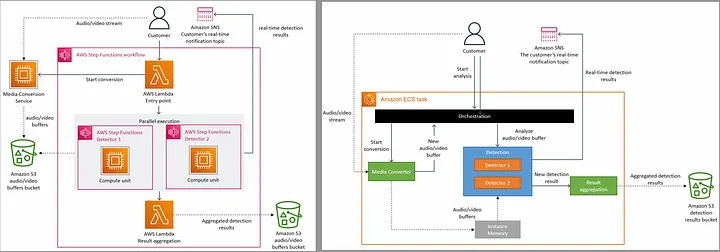

According to the Prime Video Tech article by Marcin Kolny, Amazon Prime Video moved one of its services, the audio/video quality monitoring application, from a microservice architecture to a monolith. Here is a comparison of the old microservice/serverless architecture (left) and the monolith architecture (right).

Architecture Comparison

Image Source: Prime Video Tech

Article Summary

The Amazon Prime Video VQA (Video Quality Analysis) team has an application that detects defects in live stream videos (audio, video, frozen frames …). The application was initially built on a distributed microservice architecture. It decrypted the videos, performed media conversion into thousands of video frames, analysed these frames for quality issues and notified users of stream quality problems.

However, at only a few thousand users, the system hit scaling bottlenecks and high operational costs for defect analysis. The team took a step back, looked at alternatives and decided to move to a monolith architecture.

They replaced the AWS Lambda (serverless) functions and the costly AWS Step Functions workflow orchestration with Amazon ECS tasks, and replaced the intermediate S3 buffers with instance memory. The 90% cost saving comes primarily from keeping the thousands of video frames (plus multiple copies) per live stream in instance memory instead of S3, and additionally from eliminating workflow orchestration costs.
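To make the difference concrete, here is a minimal sketch of the monolith style. The stage functions (`convert_to_frames`, `detect_defects`, `notify`) and the `Frame` type are hypothetical illustrations, not code from the article. All stages run in one process and hand frames to each other through ordinary in-memory lists, whereas the distributed version wrote intermediate results to S3 and relied on an orchestrator to invoke each next step.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Frame:
    stream_id: str
    index: int
    frozen: bool  # hypothetical flag standing in for real decoded pixel data


def convert_to_frames(stream_id: str, segment: List[bool]) -> List[Frame]:
    # Hypothetical media conversion: one Frame per decoded frame in the segment.
    return [Frame(stream_id, i, frozen) for i, frozen in enumerate(segment)]


def detect_defects(frames: List[Frame]) -> List[int]:
    # Hypothetical detector: return the indices of frames marked as frozen.
    return [f.index for f in frames if f.frozen]


def notify(stream_id: str, defects: List[int]) -> str:
    # Stand-in for a real notification; here it just builds a message.
    if defects:
        return f"{stream_id}: {len(defects)} defective frame(s) at {defects}"
    return f"{stream_id}: OK"


def analyse_segment(stream_id: str, segment: List[bool]) -> str:
    # All stages share one process, so frames never leave instance memory:
    # no intermediate object-storage writes and no per-step orchestration.
    frames = convert_to_frames(stream_id, segment)
    defects = detect_defects(frames)
    return notify(stream_id, defects)


print(analyse_segment("stream-1", [False, True, True, False]))
```

The trade-off is that the stages now scale together as one unit; the article's team accepted that in exchange for removing the per-frame storage and orchestration overhead.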

The article points out that caching the media conversion output was considered but found to be expensive, which I believe is true given the variety of devices, screen resolutions, supported formats, user locations, etc.

However, I think there is room for further improvement:

Decrypt the video first and cache the decrypted content, then perform the media conversion.

Another thought: Take code to data

The media converter and defect-detection components could have been moved closer to each live stream instead of moving the data to them.

Instead of keeping the data in instance memory, it could have been stored on EBS volumes shared across multiple EC2 instances (EBS Multi-Attach, available for io1/io2 volumes within a single Availability Zone), with the code taken to the respective EC2 instances.
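The "take code to data" idea is the same data-locality principle that big data schedulers such as Hadoop and Spark rely on. Below is a minimal greedy sketch under stated assumptions: the node names, stream IDs, sizes and slot-based capacities are all hypothetical. Each stream's analyser is placed on the node that already holds its data when a slot is free; otherwise it falls back to the node with the most free slots, and the data that would then have to be copied is counted.

```python
from typing import Dict, Tuple


def schedule(stream_node: Dict[str, str], stream_gb: Dict[str, float],
             capacity: Dict[str, int]) -> Tuple[Dict[str, str], float]:
    """Place one analyser task per stream, preferring the node that already
    holds the stream's data, and return (placement, GB that must be copied)."""
    free = dict(capacity)
    placement: Dict[str, str] = {}
    gb_moved = 0.0
    for stream, home in stream_node.items():
        if free.get(home, 0) > 0:
            node = home  # code goes to the data: nothing is copied
        else:
            node = max(free, key=lambda n: free[n])  # data must move to the code
            if free[node] <= 0:
                raise RuntimeError("no free slots left on any node")
            gb_moved += stream_gb[stream]
        free[node] -= 1
        placement[stream] = node
    return placement, gb_moved


placement, moved = schedule(
    stream_node={"stream-1": "ec2-a", "stream-2": "ec2-a", "stream-3": "ec2-b"},
    stream_gb={"stream-1": 4.0, "stream-2": 6.0, "stream-3": 5.0},
    capacity={"ec2-a": 1, "ec2-b": 2},
)
print(placement, moved)
```

Here only `stream-2` has to be copied, because its home node is already full; every other analyser runs where its data lives.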

Takeaways

- One size does not fit all. Before you deploy your application, analyse properly whether it suits a microservice architecture, a monolith, or a modular monolith (modulith) that sits between the two.

- For data-intensive applications, avoid moving or copying data as much as possible to save on cost and network bandwidth. I have personally seen this hold even for big data applications with terabytes of data. It is often cheaper to move these kinds of workloads to on-prem data centres.

- Caching: depending on the case, it can cost more and even degrade performance. Choose wisely what to cache and what not to cache.

- Think outside the box: consider whether it makes sense to take code to the data instead of bringing data to the code.

- Use your cloud provider's cost calculators when doing cost analysis. In this case, the cost was high due to both data movement and workflow orchestration.
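The last takeaway can be made concrete with a back-of-envelope model of the two cost drivers named above. This is a sketch only: the unit prices are illustrative placeholders, not authoritative AWS pricing, and the per-frame request and state-transition counts are assumptions. Real values should come from your provider's pricing pages or cost calculator.

```python
from typing import Dict

# Illustrative placeholder unit prices (USD), NOT authoritative AWS pricing.
PRICE_PER_1K_OBJECT_REQUESTS = 0.005
PRICE_PER_1K_STATE_TRANSITIONS = 0.025


def per_stream_hour_cost(fps: int, object_requests_per_frame: int,
                         transitions_per_frame: int) -> Dict[str, float]:
    """Cost of pushing every frame of one live stream, for one hour,
    through object storage and a workflow orchestrator."""
    frames = fps * 3600
    data_movement = frames * object_requests_per_frame / 1000 * PRICE_PER_1K_OBJECT_REQUESTS
    orchestration = frames * transitions_per_frame / 1000 * PRICE_PER_1K_STATE_TRANSITIONS
    return {"frames": frames, "data_movement": data_movement,
            "orchestration": orchestration, "total": data_movement + orchestration}


cost = per_stream_hour_cost(fps=30, object_requests_per_frame=2, transitions_per_frame=2)
print({k: round(v, 2) for k, v in cost.items()})
```

Both terms grow linearly with the frame count, so a per-request charge that looks negligible adds up at video frame rates and multiplies again with every concurrent stream. Keeping frames in instance memory removes both terms and leaves mainly the compute cost of the instances.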

#LinkedInPowerPlayers #FractionalUnited #Fractional #CTO #Technology #Microservices #Monolith #Modulith